Generated by DALL-E

Introduction

How easy is it to make a deep neural network misclassify an image by modifying just one pixel? Surprisingly easy. In some cases, an attacker can even steer the network toward a specific wrong class of their choosing.

Recently, I discovered the concept of the One-Pixel Attack on Deep Neural Networks. It’s fascinating how altering a single pixel can change the model’s output.

Understanding Deep Neural Networks

Deep neural networks are trained on datasets to optimize their parameters so that they generalize well to new data. Typically, those parameters are found by minimizing a loss function with Gradient Descent. The One-Pixel Attack leaves the trained parameters untouched. Instead, it uses Differential Evolution (DE), a population-based evolutionary optimization method, to search for the adversarial perturbation.

Why Differential Evolution Over Gradient Descent?

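Gradient-based methods like Gradient Descent need the derivatives of the loss with respect to the input, which requires full (white-box) access to the model and a differentiable objective. Evolutionary algorithms like Differential Evolution only need to evaluate the objective, here the model's output probabilities. That lets the attack work in a black-box setting, and it copes well with objectives that are non-differentiable or full of local optima, such as choosing the discrete coordinates of a single pixel.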
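
To make the idea concrete, here is a minimal pure-Python sketch of a standard DE variant (DE/rand/1/bin). Notice that it only ever calls the objective `f`, never its gradient. The function name and the default settings (`pop_size`, `F`, `CR`, `generations`) are illustrative choices of mine, not the settings used in the One-Pixel Attack paper.

```python
import random


def differential_evolution(f, bounds, pop_size=20, F=0.5, CR=0.9,
                           generations=200, seed=0):
    """Minimise f over a box using DE/rand/1/bin.

    Only f(x) is evaluated; no derivatives are needed, so f may be a
    black box or non-differentiable.
    """
    rng = random.Random(seed)
    dim = len(bounds)
    # Initial population: uniform random points inside the bounds.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [f(ind) for ind in pop]

    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: pick three distinct members other than i and form
            # base + F * (difference of the other two).
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[a][k] + F * (pop[b][k] - pop[c][k]) for k in range(dim)]
            # Keep the mutant inside the search box.
            mutant = [min(max(v, lo), hi) for v, (lo, hi) in zip(mutant, bounds)]
            # Binomial crossover; index j_rand always comes from the mutant.
            j_rand = rng.randrange(dim)
            trial = [mutant[k] if (k == j_rand or rng.random() < CR) else pop[i][k]
                     for k in range(dim)]
            # Greedy selection: the trial replaces its parent only if no worse.
            trial_score = f(trial)
            if trial_score <= scores[i]:
                pop[i], scores[i] = trial, trial_score

    best = min(range(pop_size), key=lambda j: scores[j])
    return pop[best], scores[best]


# Usage: minimise the non-differentiable L1 norm; the optimum is the origin.
best_x, best_f = differential_evolution(lambda x: sum(abs(v) for v in x),
                                        bounds=[(-5.0, 5.0)] * 3)
print(best_x, best_f)
```

If you would rather not hand-roll the optimizer, SciPy provides `scipy.optimize.differential_evolution`.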

One-Pixel Attack: An Adversarial Threat

A one-pixel attack is an adversarial attack that fools a machine learning model with a barely perceptible change to its input. This poses a serious security risk: by changing a single pixel, an attacker can make the network produce an incorrect output, often with high confidence.

Attack Objective:

Change the image classification by altering the value of only one pixel.
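
In symbols, this objective can be written as a constrained optimization problem. The notation is my own shorthand for illustration: x is the input image, e(x) the perturbation, and f_t the model's probability for a chosen target class t.

```latex
\max_{e(\mathbf{x})} \; f_t\big(\mathbf{x} + e(\mathbf{x})\big)
\quad \text{subject to} \quad \lVert e(\mathbf{x}) \rVert_0 \le 1
```

Here the L0 "norm" counts how many pixels the perturbation changes, so at most one pixel may be modified. For an untargeted attack, the same problem is posed as minimizing the probability of the true class instead. Each candidate perturbation can be encoded as five numbers (x, y, r, g, b): the pixel's coordinates and its new color.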

Process:

- Select a pixel in the image.

- Change the color (RGB value) of that pixel.

- Evaluate the impact on the model’s prediction.

- Use differential evolution to search jointly over the pixel's position and color for the change that most confuses the classifier, i.e., most reduces its confidence in the correct class.
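
Putting the steps above together, here is a minimal pure-Python sketch of an untargeted one-pixel attack. Each candidate is a vector (x, y, r, g, b), and the fitness is the model's probability for the true class, which DE drives down. The classifier `toy_model`, the 8x8 image size, and the DE settings are hypothetical stand-ins (a real attack would query a trained CNN), and the sketch skips DE's crossover step for brevity.

```python
import math
import random

H, W = 8, 8  # hypothetical image size


def toy_model(image):
    """Hypothetical 2-class classifier: class 0 = 'dark', class 1 = 'bright'.

    It only looks at the brightest pixel, so it is deliberately fragile to
    single-pixel changes. Returns softmax probabilities [p_dark, p_bright].
    """
    brightest = max(sum(px) / (3 * 255) for row in image for px in row)
    logits = [5 * (0.5 - brightest), 5 * (brightest - 0.5)]
    exps = [math.exp(z) for z in logits]
    return [e / sum(exps) for e in exps]


def perturb(image, candidate):
    """Return a copy of the image with one pixel set by candidate (x, y, r, g, b)."""
    x, y, r, g, b = candidate
    out = [[list(px) for px in row] for row in image]
    out[min(int(y), H - 1)][min(int(x), W - 1)] = [int(r), int(g), int(b)]
    return out


def one_pixel_attack(model, image, true_class, pop_size=30, F=0.5,
                     generations=50, seed=0):
    """Untargeted attack: search (x, y, r, g, b) to minimise P(true_class)."""
    rng = random.Random(seed)
    bounds = [(0, W), (0, H), (0, 255), (0, 255), (0, 255)]

    def fitness(candidate):
        return model(perturb(image, candidate))[true_class]

    # Random initial population of candidate pixel edits.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [fitness(c) for c in pop]
    for _ in range(generations):
        for i in range(pop_size):
            r1, r2, r3 = rng.sample([j for j in range(pop_size) if j != i], 3)
            # DE/rand/1 mutation, clipped to the bounds (no crossover here).
            child = [min(max(pop[r1][k] + F * (pop[r2][k] - pop[r3][k]), lo), hi)
                     for k, (lo, hi) in enumerate(bounds)]
            child_score = fitness(child)
            # Keep the child only if it lowers the true-class probability.
            if child_score < scores[i]:
                pop[i], scores[i] = child, child_score
    best = min(range(pop_size), key=lambda j: scores[j])
    return pop[best], scores[best]


# Usage: a uniformly dark image that the toy model confidently calls 'dark'.
dark_image = [[[30, 30, 30] for _ in range(W)] for _ in range(H)]
candidate, p_true = one_pixel_attack(toy_model, dark_image, true_class=0)
adversarial = perturb(dark_image, candidate)
print("before:", toy_model(dark_image))
print("after: ", toy_model(adversarial), "pixel edit:", [round(v) for v in candidate])
```

Because the loop only ever calls `model(...)`, the same search works against any classifier that returns class probabilities, which is what makes the attack black-box.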

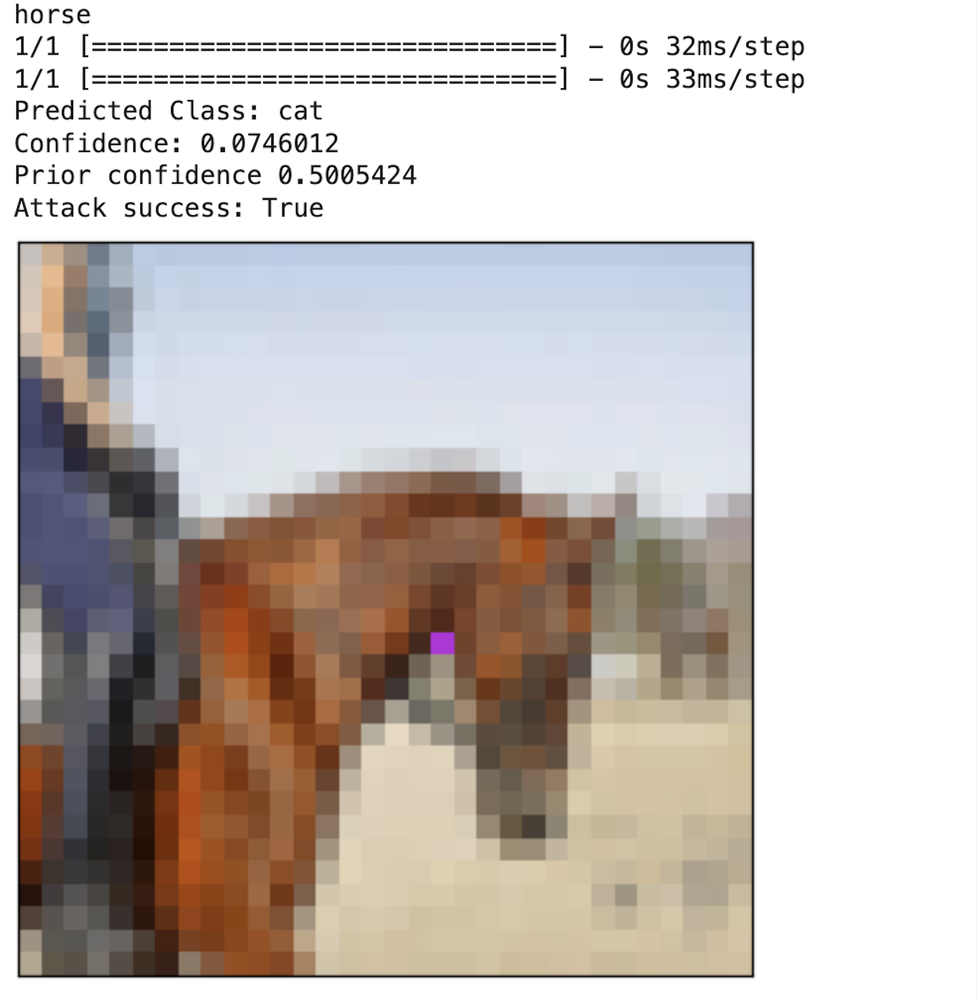

Image_1 – Output: Successful Image Misclassification by One-Pixel Attack

Effectiveness:

Despite the tiny change (the pink pixel in Image_1), this attack can cause a significant misclassification, highlighting how sensitive neural networks can be to minor perturbations. The goal is to minimize the model's confidence in the correct class, thereby maximizing the total probability assigned to all other classes.
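
Those two goals are really one, because the model's softmax outputs always sum to 1. Writing p_c for the model's probability of class c on the perturbed image x' and y for the true class (notation assumed here for illustration):

```latex
\sum_{c \neq y} p_c(\mathbf{x}') = 1 - p_y(\mathbf{x}')
\quad\Longrightarrow\quad
\arg\min_{\mathbf{x}'} \, p_y(\mathbf{x}') = \arg\max_{\mathbf{x}'} \sum_{c \neq y} p_c(\mathbf{x}')
```

So the attack only needs a single fitness value, the true-class probability, and DE simply drives it down.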

Real-World Applications

- Autonomous Vehicles: Manipulating road signs to cause incorrect interpretations.

- Spam Filters: Bypassing email filters that scan image attachments by subtly altering the images so their spam indicators go undetected.

- Biometric Authentication: Gaining unauthorized access by fooling facial recognition systems.

- Medical Image Analysis: Misleading diagnostic tools with altered medical images.

Preventive Approaches

- Adversarial Testing: Ensure validation and test sets include adversarial scenarios.

- Penetration Testing: Include adversarial attack scenarios in security evaluations to assess model resilience.
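
For adversarial testing on small images, one cheap check is exhaustive search: try every pixel position with a handful of extreme colors and record which edits flip the prediction. This is brute force rather than DE, so it only scales to tiny inputs. The `toy_model`, the 4x4 image, the eight-color palette, and `one_pixel_vulnerabilities` are all hypothetical.

```python
import itertools
import math

H, W = 4, 4  # hypothetical image size
# Corners of the RGB cube: a small palette of extreme colours to try.
PALETTE = list(itertools.product((0, 255), repeat=3))


def toy_model(image):
    """Hypothetical 2-class model: class 1 if the top-left 2x2 patch is bright."""
    patch = [image[y][x] for y in range(2) for x in range(2)]
    brightness = sum(sum(px) for px in patch) / (4 * 3 * 255)
    logits = [10 * (0.3 - brightness), 10 * (brightness - 0.3)]
    exps = [math.exp(z) for z in logits]
    return [e / sum(exps) for e in exps]


def one_pixel_vulnerabilities(model, image):
    """Return every (x, y, colour) whose single-pixel change flips the label."""
    probs = model(image)
    label = max(range(len(probs)), key=probs.__getitem__)
    flips = []
    for y, x, colour in itertools.product(range(H), range(W), PALETTE):
        adv = [[list(px) for px in row] for row in image]  # copy, then edit one pixel
        adv[y][x] = list(colour)
        adv_probs = model(adv)
        if max(range(len(adv_probs)), key=adv_probs.__getitem__) != label:
            flips.append((x, y, colour))
    return flips


image = [[[60, 60, 60] for _ in range(W)] for _ in range(H)]
flips = one_pixel_vulnerabilities(toy_model, image)
print(f"{len(flips)} of {H * W * len(PALETTE)} single-pixel edits flip the label")
# prints: 16 of 128 single-pixel edits flip the label
```

On a real model you would swap in your own classifier and track the fraction of test images that have at least one flipping edit; for larger images, a guided search such as DE is needed instead of brute force.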

By understanding and preparing for such adversarial attacks, we can enhance the security and reliability of AI systems in critical applications.

GitHub

Please find my repository here. I am keen to explore the attack surface for higher-resolution images. If anyone is interested in collaborating, let's connect.
